This post describes an implementation of a deep learning semantic segmentation model to solve the Kaggle ship detection from aerial imagery challenge. This implementaton uses fast.ai and is based on the camvid example. It is modified to work for binary classification instead of multiclassification. In this project I employed fast.ai novel techniques:

*Finding the initial learning rate

*Utilizing pre training, which is built into the Learner

*Freezing and unfreezing layers

This post highlights one crucial step where I needed to subclass a fast.ai standard - an ImageData Object - to load training data for binary classification. With the release of new fast.ai versions, I understand this subclassing process - which I found to lightly documented - will change.

The appeal of fast.ai for me is the ability to prototype quickly. I also especially like that one can subclass the standard libary. I plan to maintain my own fork in the future.

Required Before Starting:

For any deep learning task, you will eventually need a GPU enabled environment to train you network. However, for setting up your experiment (i.e. tasks like reading and viewing samples of your data, creating your labels, preprocessing files, tuning some parameters, etc.) you do not need to be running and paying for a GPU instance. Fast.ai has instructions for setting up an AWS GPU-enabled EC2 instance here: https://course.fast.ai/start_aws.html, but for setting up a project I prefer Google Colab.

Download the contest training data (or a subset!) from https://www.kaggle.com/c/airbus-ship-detection and save it to your file system. Kaggle datasets often 1) contain extra files you do not need (here is a solution for specifying specific files in Kaggle CLI here) and 2) have folders jammed with 10s of GB of files, which is more than you probably need when initially setting up your project. Only the most serious rounds of training will require the full dataset.

The full notebook is available here on github. It uses a subset of the contest training data hosted in an open S3 bucket, which is enough to demonstrate preprocessing and training but not enough to train a working model. Open and run it in Google Colab!

Import libraries and set path variable to data dir

1 | from fastai.vision import * |

Read labels, create validation set.

In this semantic segmentation challenge, we eventually must determine which pixels in images are ships.

The fast.ai image data learner requires the training set and label set resemble image files. Often semantic segmentation data is distributed this way. In this challenge, the labels (“masks”) are distributed in run length encoded format (RLE) stored in a csv file. We read the file, for each encoded mask we write an image file and save it.

1 | label_df_raw = pd.read_csv(f'{DATA_DIR}/train_ship_segmentations_v2.csv', low_memory=False ) |

For each mask in RLE format, use the fastai open_mask_rel function to read data to image-like format, save it in label/ folder, so is can be later read by the Learner.

1 | """## Write masks to jpgs in /labels""" |

Our training and label folders contain image files with corresponding names (i.e. train/432.jpg corresponds to label/432.jpg). To designate a validation set to the learner, we can pass it a list of image file names.

1 | """## Move some ship files to valid.txt""" |

get_y_fn is needed in the datablock api - it says for a training image train/432.jpg, the label is label/432.jpg.

1 | #this function is need in our model, for a given training image, based on the file name of the image it will look in the corresponding labels directory for the |

it is helpful to check the output of the get_y_fn. Fast.ai image library includes practical tools for checking data in a notebook cell.

1 | #check output |

Set parameters for datablock API

1 | size = src_size//4 |

Key step: Fast.ai will read the label images written above. In the label images each pixel is 0 (no ship) or 1 (ship), but the ImageClass open() function will see 0 for no ship or 255 for ship. We override the function by 1) creating a subclass, and 2) reimplement open() to load 0 or 1, which can be accomplished using open_mask() with param div=True.

The idea for this was here https://forums.fast.ai/t/unet-binary-segmentation/29833/40

1 | # subclassing SegmentationLabelList to set open_mask(fn, div=True) |

Run datablock API to bundle data and specify training methods for model.

1 | bel_from_func(get_y_fn, classes=codes)) |

Some notebook commands to inspect the newly created data object

1 | data |

Training utilities from fast.ai examples for learner

1 | """## Create Learner""" |

Create learner to creates and train our model. We use the U-net learner (pre-trained on Resnet 34, this is a fast.ai specialty where they created the U-net downcycle from a pretrained Resnet34!). We also pass in the data object and loss functions.

1 | learn = unet_learner(data, models.resnet34, metrics=metrics) |

Next we start training, anybody who has taken some fast.ai will recognize the standard technique of using lr_find to identify the proper learning rate.

1 | learn.lr_find() |

Next we perform an initial round of training with most layers frozen.

1 | learn.fit_one_cycle(3, max_lr=lr) |

We follow this by training the entire network end to end.

1 | learn.unfreeze() |

That is enough training for now. The linked notebook is a full example including code for 1) performing a second round of training on a dataset of larger images, a technique known as progressive resizing and 2) running inference, which is needed to generate predictions on the Kaggle contest test data and then submited, evaluated, and scored.

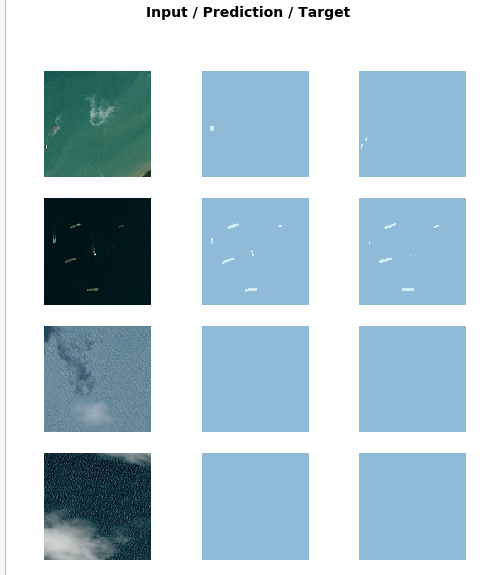

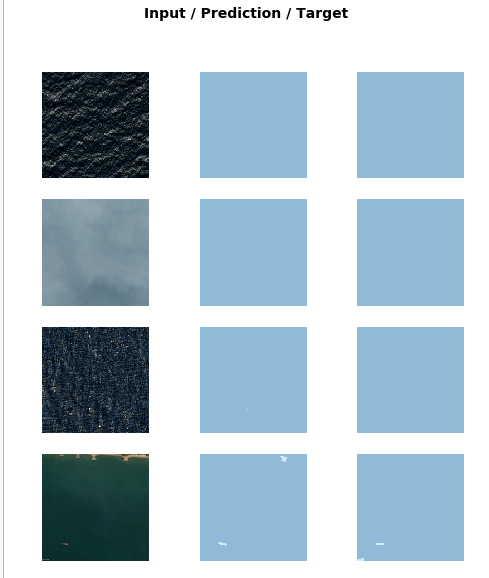

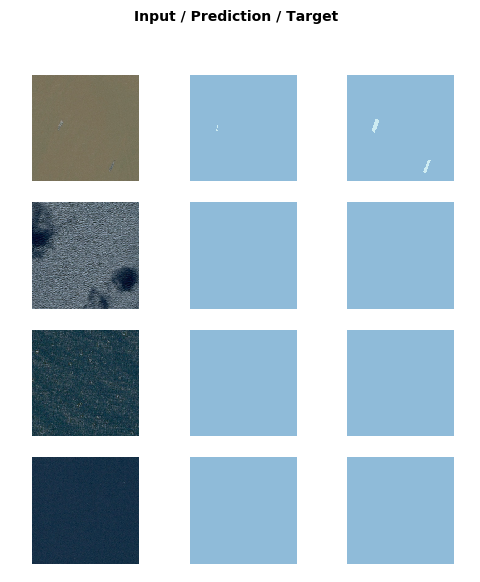

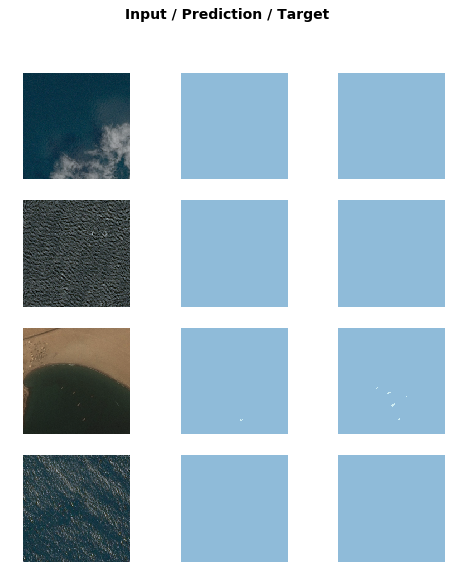

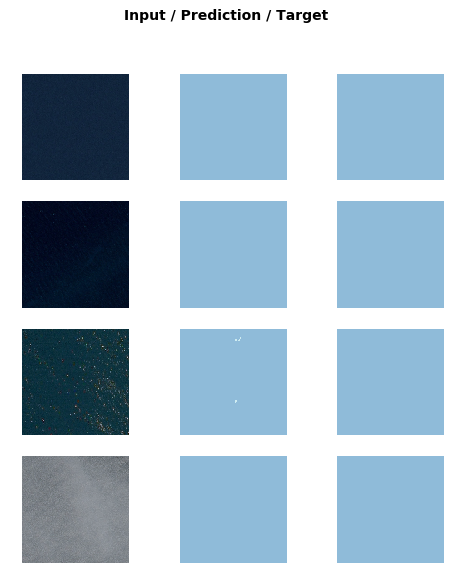

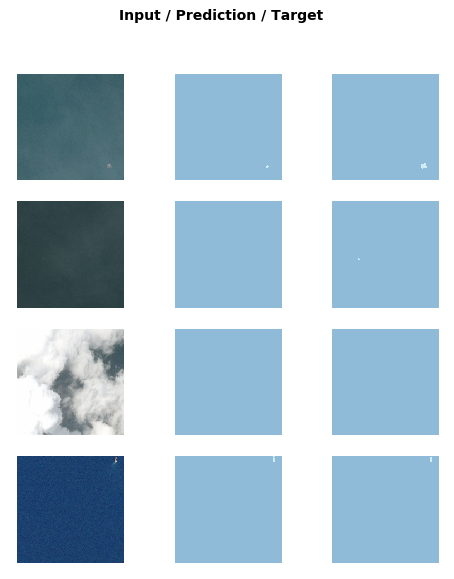

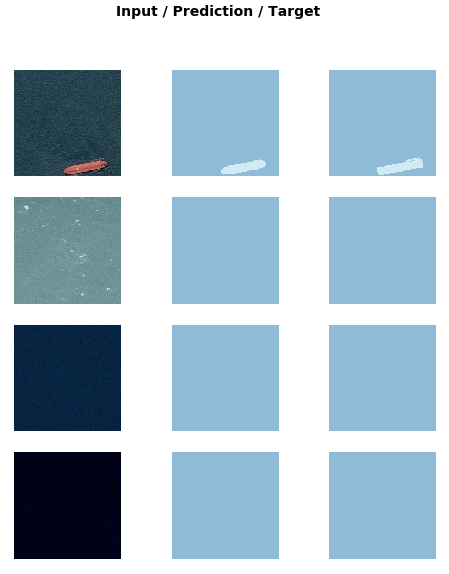

Results